Jenkins is one of the earliest, and still one of the most widely used, continuous integration and continuous delivery (CI/CD) servers. It has plenty of competition these days, but it still has a strong community and a huge number of plugins (1,400 last time I checked). Even if you end up using a different automation server, it is important to understand Jenkins: the basic concepts of CI/CD don't change much from one product to another, even though vendors tend to coin their own terminology.

In this article I will draw on the official Jenkins tutorial, specifically showing you how to use the new Blue Ocean GUI, but I will add my own explanations and illustrations for steps and code that may not be obvious. My goal is to get you to the point where you can set up building, testing, and delivery for your own project.

Jenkins and Docker

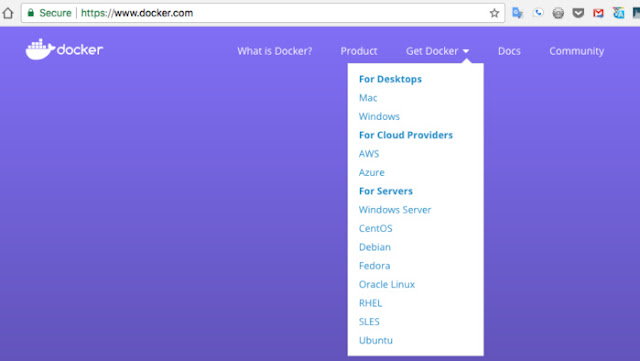

As a matter of convenience, the Blue Ocean tutorials run Jenkins in a Docker container. Before you can run the Docker command that launches Jenkins, you must install Docker and make sure it is running.
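Because everything that follows assumes a working Docker installation, a quick pre-flight check can save some confusion. The following is a minimal Python sketch, not part of the Jenkins tutorial: it assumes the `docker` CLI is on your `PATH` and treats a successful `docker info` call (which has to contact the Docker daemon) as evidence that Docker is running. The function name `docker_available` is hypothetical.

```python
import shutil
import subprocess


def docker_available(timeout: float = 10.0) -> bool:
    """Return True if the docker CLI is installed and the daemon answers."""
    # Step 1: is the docker client installed at all?
    if shutil.which("docker") is None:
        return False
    # Step 2: `docker info` talks to the daemon and exits with a
    # non-zero status if the daemon is not running or not reachable.
    try:
        result = subprocess.run(
            ["docker", "info"],
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=timeout,
        )
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0


if __name__ == "__main__":
    if docker_available():
        print("Docker is installed and running.")
    else:
        print("Install Docker and start the Docker daemon before launching Jenkins.")
```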

Tutorial: Continuous Delivery with Docker and Jenkins

Continuous Delivery means releasing software in short, automated cycles. This improves the reliability of the release process, reduces risk, and gives us faster feedback. However, setting up a Continuous Delivery pipeline can be difficult at first. In this step-by-step tutorial, I'll show you how to configure a simple Continuous Delivery pipeline using Git, Docker, Maven and Jenkins.

Introduction to Continuous Delivery

The Traditional Release Cycle

The "old-school" release approach means shipping a release after a certain period of time (say, 6 months). We have to package the release, test it, set up or update the necessary infrastructure, and finally deploy it to the server.

What are the problems with this approach?

- The release process is carried out rarely. As a result, we hardly ever practice releasing, and mistakes happen more easily.

- Manual steps. The release process consists of many steps that must be performed manually (shutdown, setting up / updating the infrastructure, deployment, restart and manual tests). Consequences:

  - Mistakes are more likely to occur when these steps are performed manually.

  - The whole release process is more laborious, cumbersome and time-consuming.

- Many changes have been made since the last release 6 months ago. This increases the chance that

  - something goes wrong when we integrate the different components (e.g. version conflicts, side effects, incompatible components), or

  - there is a bug in the application itself.

  But it's hard to tell which change is causing the problem, because there have been so many changes.

- Late feedback. We discover problems too late, because we deploy the application late in the development process instead of routinely: we only try to ship it when we actually want to make a release.

All in all, there is a high risk that something goes wrong during the release process or that the application contains bugs. Releases are risky and scary, aren't they? Maybe that's why we release so rarely. But releasing rarely makes each release even riskier and scarier. What can we do?

Continuous Delivery

“If it hurts do it more often and bring the pain forward.”

We reduce the pain of releasing by releasing more often. To make that feasible, we must automate the entire release process (packaging the release, setting up / updating the infrastructure, deployment, final tests) and eliminate all manual steps. This is what allows us to increase the release frequency.
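To make "automate the entire release process" a bit more concrete, here is a minimal Python sketch, assuming each release step can be written as a function that raises an exception when it fails. The step names mirror the steps listed above, but their bodies are hypothetical placeholders; in a real setup they would call your build tool, Docker and test suite. The property that matters is that the steps always run in the same order, the run stops at the first failure, and nobody has to intervene by hand.

```python
from typing import Callable, List, Tuple

# A release step: a human-readable name plus a function taking the version.
Step = Tuple[str, Callable[[str], None]]


def package_release(version: str) -> None:
    # Hypothetical placeholder: e.g. build the artifact for `version`.
    print(f"packaging {version}")


def update_infrastructure(version: str) -> None:
    # Hypothetical placeholder: e.g. provision or update servers.
    print(f"updating infrastructure for {version}")


def deploy(version: str) -> None:
    # Hypothetical placeholder: e.g. start the new version.
    print(f"deploying {version}")


def run_final_tests(version: str) -> None:
    # Hypothetical placeholder: e.g. smoke tests against the deployment.
    print(f"testing {version}")


RELEASE_STEPS: List[Step] = [
    ("package", package_release),
    ("update infrastructure", update_infrastructure),
    ("deploy", deploy),
    ("final tests", run_final_tests),
]


def release(version: str, steps: List[Step] = RELEASE_STEPS) -> List[str]:
    """Run every step in order and abort on the first failure.

    Returns the names of the steps that completed successfully.
    """
    completed: List[str] = []
    for name, step in steps:
        try:
            step(version)
        except Exception as exc:
            # Fail fast: later steps never run on a broken release.
            raise RuntimeError(f"release {version} failed at step '{name}'") from exc
        completed.append(name)
    return completed


if __name__ == "__main__":
    release("1.0.1")
```

Because the same function runs for every release, there is no step to forget or mistype, which is what makes frequent releases affordable.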

What are the benefits of this approach?

- Fewer errors occur in an automated process than in a manual one.

- Only a few changes are made between two releases. The risk of errors is small, and when an error does occur we can easily trace it back to the change that caused it.

- We don't package and deploy our application only at the end of the development phase; we do it early and often. This way, we find problems in the release process early.

- Thanks to the automated release process, we can deliver business value to production faster and therefore reduce time-to-market.

- Deploying our application to production is low-risk, because we run exactly the same automated process for production as for testing or pre-production systems.

All in all, Continuous Delivery is about

- reduced risk,

- increased reliability,

- faster feedback,

- accelerated release speed and time-to-market.

Continuous Delivery Using Docker

From a technical point of view, Continuous Delivery revolves around automating and optimizing the delivery pipeline. A simple delivery pipeline can look like this:

The big challenge is the automated provisioning of the infrastructure and environment our application needs to run, and we need this infrastructure at every stage of our delivery pipeline. Fortunately, Docker is great at creating reproducible infrastructure. With Docker, we create an image that contains our app together with the required infrastructure (e.g. application server, JRE, VM arguments, files, permissions). All we have to do is run this image at each stage of the delivery pipeline, and our app is up and running. In addition, Docker uses lightweight virtualization, so we can easily clean up old versions of the app and its infrastructure just by stopping the corresponding Docker containers.
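The "build one image, run it at every stage" idea can be sketched in Python as a dry run that only assembles the Docker commands instead of executing them. This is an illustration under assumptions, not the tutorial's actual pipeline: the stage names (`test`, `acceptance`, `production`), the tag scheme `hello-world-app:<commit>` and port 8080 are hypothetical. The Docker CLI subcommands and flags used (`build -t`, `rm -f`, `run -d --name -p`) are standard.

```python
from typing import List

IMAGE_NAME = "hello-world-app"                 # assumed image name
STAGES = ["test", "acceptance", "production"]  # hypothetical stages


def image_ref(commit: str) -> str:
    # One immutable tag per commit, e.g. hello-world-app:3f2a9c1
    return f"{IMAGE_NAME}:{commit}"


def build_command(commit: str) -> List[str]:
    # The image is built exactly once, at the start of the pipeline.
    return ["docker", "build", "-t", image_ref(commit), "."]


def deploy_commands(commit: str, stage: str) -> List[List[str]]:
    container = f"{IMAGE_NAME}-{stage}"
    return [
        # Remove the previous version's container (this exits with an
        # error if none exists; a real script would ignore that) ...
        ["docker", "rm", "-f", container],
        # ... and start the *same* image that every other stage runs.
        # Each stage is assumed to run on its own host, so reusing
        # port 8080 does not clash.
        ["docker", "run", "-d", "--name", container, "-p", "8080:8080", image_ref(commit)],
    ]


def pipeline_plan(commit: str) -> List[List[str]]:
    """Build once, then deploy the identical image stage by stage."""
    plan = [build_command(commit)]
    for stage in STAGES:
        plan.extend(deploy_commands(commit, stage))
    return plan


if __name__ == "__main__":
    for cmd in pipeline_plan("3f2a9c1"):
        print(" ".join(cmd))
```

Tagging the image with the commit and never rebuilding it means that what reaches production is byte-for-byte what passed the earlier stages.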

Example Application and the Benefits of a Runnable Fat Jar

Our example app, "hello-world-app", is a simple microservice built with Dropwizard. Dropwizard lets us build a runnable fat jar that already includes an embedded Jetty server, so we just need to run the jar to start our microservice. This simplifies both the required infrastructure (no servlet container has to be installed first, and no war has to be deployed into one) and the deployment process (just copy the jar and run it). As a result, the architectural decision to use runnable fat jars makes setting up the Continuous Delivery pipeline considerably easier.
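Dropwizard itself is a Java framework, so the following is only an analogue, not Dropwizard code: a minimal Python sketch of the same principle. It is a single self-contained program that embeds its own HTTP server (here the standard library's `http.server`), so starting the service means running one file, with no separately installed server. The `/hello` endpoint, the JSON payload and port 8080 are assumptions for illustration.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Handles requests for the hypothetical /hello endpoint."""

    def do_GET(self) -> None:
        if self.path == "/hello":
            body = json.dumps({"message": "Hello, World!"}).encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, format: str, *args) -> None:
        # Keep the console quiet in this sketch.
        pass


def make_server(port: int = 8080, host: str = "0.0.0.0") -> HTTPServer:
    # The server is part of the application itself: nothing like a
    # servlet container has to be installed separately. Listening on
    # all interfaces lets the port be published from a Docker container.
    return HTTPServer((host, port), HelloHandler)


if __name__ == "__main__":
    server = make_server()
    print("listening on port 8080, try /hello")
    server.serve_forever()
```

Deploying this sketch amounts to copying one file and running it with `python`, which mirrors the "copy the jar and run it" step that the fat-jar approach enables.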

Source: https://blog.philipphauer.de/tutorial-continuous-delivery-with-docker-jenkins/